Most of our day-to-day job is learned through mentorship and experience and not based upon scientific research. Once a dogma has permeated a significant minority of practitioners, it becomes very hard to challenge it.

Yet, in this post, I’ll attempt not only to argue that tests must sometimes be ordered, but also to demonstrate it through several use-cases.

Your tests shall not be ordered (shall they?)

Some of my conference talks are more or less related to testing, and I never fail to point out that TestNG is superior to JUnit if only because it allows for test method ordering. At that point, I’m regularly asked at the end of the talk why method ordering matters. It’s a widespread belief that tests shouldn’t be ordered. Here are some samples found here and there:

Of course, well-written test code would not assume any order, but some do.

https://github.com/junit-team/junit4/wiki/test-execution-order

Each test runs in its own test fixture to isolate tests from the changes made by other tests. That is, tests don’t share the state of objects in the test fixture. Because the tests are isolated, they can be run in any order.

http://junit.org/junit4/faq.html#atests_2

You’ve definitely taken a wrong turn if you have to run your tests in a specific order […]

http://blog.stevensanderson.com/2009/08/24/writing-great-unit-tests-best-and-worst-practises

Always Write Isolated Test Cases

The order of execution has to be independent between test cases. This gives you the chance to rearrange the test cases in clusters (e.g. short-, long-running) and retest single test cases.

http://www.sw-engineering-candies.com/blog-1/unit-testing-best-practices#TOC-Always-Write-Isolated-Test-Cases

And this goes on ad nauseam…

In most cases, this makes perfect sense.

If I’m testing an add(int, int) method, there’s no reason why one test case should run before another.

However, this is hardly a one-size-fits-all rule.

The following use-cases take advantage of test ordering.

Tests should fail for a single reason

Let’s start with a simple example: the code consists of a controller that stores a list of x Foo entities in the HTTP request under the key bar.

The naive approach

The first approach would be to create a test method that asserts the following:

- a value is stored under the key bar in the request

- the value is of type List

- the list is not empty

- the list has size x

- the list contains no null entities

- the list contains only Foo entities

Using AssertJ, the code looks like the following:

// 1: asserts can be chained through the API

// 2: AssertJ features can make the code less verbose

@Test

public void should_store_list_of_x_Foo_in_request_under_bar_key() {

controller.doStuff();

Object key = request.getAttribute("bar");

assertThat(key).isNotNull(); (1)

assertThat(key).isInstanceOf(List.class); (2)

List list = (List) key;

assertThat(list).isNotEmpty(); (3)

assertThat(list).hasSize(x); (4)

list.stream().forEach((Object it) -> {

assertThat(object).isNotNull(); (5)

assertThat(object).isInstanceOf(Foo.class); (6)

});

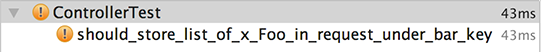

}If this test method fails, the reason can be found in any of the previous steps. A customary glance at the failure report is not enough to tell exactly which one.

To know that, one has to analyze the stack trace then the source code.

java.lang.AssertionError: Expecting actual not to be null

at ControllerTest.should_store_list_of_x_Foo_in_request_under_bar_key(ControllerTest.java:31)

A test method per assertion

An alternative could be to refactor each assertion into its own test method:

@Test

public void bar_should_not_be_null() {

controller.doStuff();

Object bar = request.getAttribute("bar");

assertThat(bar).isNotNull();

}

@Test

public void bar_should_of_type_list() {

controller.doStuff();

Object bar = request.getAttribute("bar");

assertThat(bar).isInstanceOf(List.class);

}

@Test

public void list_should_not_be_empty() {

controller.doStuff();

Object bar = request.getAttribute("bar");

List<?> list = (List) bar;

assertThat(list).isNotEmpty();

}

@Test

public void list_should_be_of_size_x() {

controller.doStuff();

Object bar = request.getAttribute("bar");

List<?> list = (List) bar;

assertThat(list).hasSize(x);

}

@Test

public void instances_should_be_of_type_foo() {

controller.doStuff();

Object bar = request.getAttribute("bar");

List<?> list = (List) bar;

list.stream().forEach((Object it) -> {

assertThat(it).isNotNull();

assertThat(it).isInstanceOf(Foo.class);

});

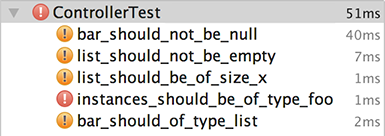

}Now, every failing test is correctly displayed.

But if the bar attribute is not found in the request, every test will still run and still fail, whereas they should merely be skipped.

Even if the waste is small, it still takes time to run unnecessary tests. Worse, it’s a waste of time to analyze the cause of each failure.

A private method per assertion

It seems ordering the tests makes sense. But ordering is bad, right? Let’s try to abide by the rule, by having a single test calling private methods:

@Test

public void should_store_list_of_x_Foo_in_request_under_bar_key() {

controller.doStuff();

Object bar = request.getAttribute("bar");

bar_should_not_be_null(bar);

bar_should_of_type_list(bar);

List<?> list = (List) bar;

list_should_not_be_empty(list);

list_should_be_of_size_x(list);

instances_should_be_of_type_foo(list);

}

private void bar_should_not_be_null(Object bar) {

assertThat(bar).isNotNull();

}

private void bar_should_of_type_list(Object bar) {

assertThat(bar).isInstanceOf(List.class);

}

private void list_should_not_be_empty(List<?> list) {

assertThat(list).isNotEmpty();

}

private void list_should_be_of_size_x(List<?> list) {

assertThat(list).hasSize(x);

}

private void instances_should_be_of_type_foo(List<?> list) {

list.stream().forEach((Object it) -> {

assertThat(it).isNotNull();

assertThat(it).isInstanceOf(Foo.class);

});

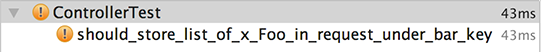

}Unfortunately, it’s back to square one: it’s not possible to just know in which step the test failed just at a glance.

At least the stack trace conveys a little more information:

java.lang.AssertionError: Expecting actual not to be null

at ControllerTest.bar_should_not_be_null(ControllerTest.java:40)

at ControllerTest.should_store_list_of_x_Foo_in_request_under_bar_key(ControllerTest.java:31)

How can one skip unnecessary tests and still easily know the exact reason for the failure?

Ordering it is

Like it or not, there’s no way to achieve skipping and easy analysis without ordering:

// Ordering is achieved using TestNG

@Test

public void bar_should_not_be_null() {

controller.doStuff();

Object bar = request.getAttribute("bar");

assertThat(bar).isNotNull();

}

@Test(dependsOnMethods = "bar_should_not_be_null")

public void bar_should_of_type_list() {

controller.doStuff();

Object bar = request.getAttribute("bar");

assertThat(bar).isInstanceOf(List.class);

}

@Test(dependsOnMethods = "bar_should_of_type_list")

public void list_should_not_be_empty() {

controller.doStuff();

Object bar = request.getAttribute("bar");

List<?> list = (List) bar;

assertThat(list).isNotEmpty();

}

@Test(dependsOnMethods = "list_should_not_be_empty")

public void list_should_be_of_size_x() {

controller.doStuff();

Object bar = request.getAttribute("bar");

List<?> list = (List) bar;

assertThat(list).hasSize(x);

}

@Test(dependsOnMethods = "list_should_be_of_size_x")

public void instances_should_be_of_type_foo() {

controller.doStuff();

Object bar = request.getAttribute("bar");

List<?> list = (List) bar;

list.stream().forEach((Object it) -> {

assertThat(it).isNotNull();

assertThat(it).isInstanceOf(Foo.class);

});

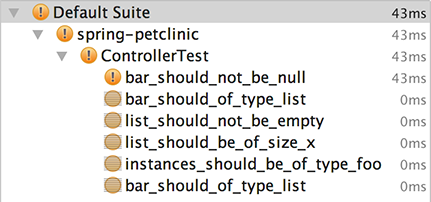

}The result is the following:

Of course, the same result is achieved when the test is run with Maven:

Tests run: 5, Failures: 1, Errors: 0, Skipped: 4, Time elapsed: 0.52 sec <<< FAILURE!

bar_should_not_be_null(ControllerTest) Time elapsed: 0.037 sec <<< FAILURE!

java.lang.AssertionError: Expecting actual not to be null

at ControllerTest.bar_should_not_be_null(ControllerTest.java:31)

Results :

Failed tests:

ControllerTest.bar_should_not_be_null:31 Expecting actual not to be null

Tests run: 5, Failures: 1, Errors: 0, Skipped: 4

In this case, by ordering unit test methods, one can both reduce testing time and speed up failure analysis, because tests that are bound to fail anyway are skipped.
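To make the skipping behavior concrete without pulling in a test framework, here is a minimal sketch of "depends on" semantics in plain Java. It is only an illustration under my own assumptions, not how TestNG is implemented: the DependentChecks class, its Check and Status types, the require() helper and the stand-in Foo class are all hypothetical names. The check names mirror the test methods above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, stdlib-only illustration of "depends on" semantics.
// This is not TestNG's implementation; every name below is made up.
class DependentChecks {

    enum Status { PASSED, FAILED, SKIPPED }

    // Stand-in for the article's Foo entity.
    static class Foo { }

    // A named check and the name of the check it depends on (null if none).
    static class Check {
        final String name;
        final String dependsOn;
        final Runnable body;

        Check(String name, String dependsOn, Runnable body) {
            this.name = name;
            this.dependsOn = dependsOn;
            this.body = body;
        }
    }

    // Runs checks in list order; a check whose dependency did not pass is skipped.
    static Map<String, Status> run(List<Check> checks) {
        Map<String, Status> results = new LinkedHashMap<>();
        for (Check check : checks) {
            boolean ready = check.dependsOn == null
                    || results.get(check.dependsOn) == Status.PASSED;
            if (!ready) {
                results.put(check.name, Status.SKIPPED);
                continue;
            }
            try {
                check.body.run();
                results.put(check.name, Status.PASSED);
            } catch (AssertionError | RuntimeException e) {
                results.put(check.name, Status.FAILED);
            }
        }
        return results;
    }

    static void require(boolean condition) {
        if (!condition) {
            throw new AssertionError("check failed");
        }
    }

    // The five checks from the article, each depending on the previous one.
    static Map<String, Status> checkBar(Object bar, int x) {
        List<Check> checks = new ArrayList<>();
        checks.add(new Check("bar_should_not_be_null", null,
                () -> require(bar != null)));
        checks.add(new Check("bar_should_of_type_list", "bar_should_not_be_null",
                () -> require(bar instanceof List)));
        checks.add(new Check("list_should_not_be_empty", "bar_should_of_type_list",
                () -> require(!((List<?>) bar).isEmpty())));
        checks.add(new Check("list_should_be_of_size_x", "list_should_not_be_empty",
                () -> require(((List<?>) bar).size() == x)));
        checks.add(new Check("instances_should_be_of_type_foo", "list_should_be_of_size_x",
                () -> ((List<?>) bar).forEach(it -> require(it instanceof Foo))));
        return run(checks);
    }

    public static void main(String[] args) {
        // Attribute missing: the first check fails, the four dependents are skipped.
        System.out.println(checkBar(null, 3));
        // Attribute present and well-formed: every check passes.
        System.out.println(checkBar(Arrays.asList(new Foo(), new Foo(), new Foo()), 3));
    }
}
```

With a missing attribute, only the first check is reported as failed and the remaining four are reported as skipped, which matches the 1 failure and 4 skipped of the Maven report above.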

Unit testing and Integration testing

In my talks about Integration testing, I usually use the example of a prototype car. Unit testing is akin to testing every nut and bolt of the car, while Integration testing is like taking the prototype on a test drive.

No project manager would take the risk of sending the car on a test drive without having made sure its pieces are of good enough quality. It would be too expensive to fail just because of a faulty screw; test drives are supposed to validate higher-level concerns, those that cannot be checked by Unit testing.

Hence, unit tests should be run first, and integration tests only afterwards. In that case, one can rely on the Maven Failsafe plugin to run Integration tests later in the Maven lifecycle.

Integration testing scenarios

What might be seen as a corner case in unit testing is widespread in integration tests, and even more so in end-to-end tests. In the latter case, an example I regularly use is the e-commerce application. The steps of a typical scenario are as follows:

- Browse the product catalog

- Put one product in the cart

- Display the summary page

- Fill in the delivery address

- Choose a payment type

- Enter payment details

- Get order confirmation

In a context with no ordering, this has several consequences:

- Step X+1 is dependent on step X, e.g. to enter payment details, one must have chosen a payment type first, which requires the latter to work

- Step X+2 and X+1 both need to set up step X. This leads either to code duplication, as setup code is copy-pasted into all required steps, or to common setup code, which increases maintenance cost (yes, sharing is caring but it’s also more expensive).

- The initial state of step X+1 is the final state of step X, i.e. at the end of testing step X, the system is ready to start testing step X+1

- Trying to test step X+n if step X has already failed is wasted time, both in terms of server execution time and of failure analysis time. Of course, the higher n, the greater the waste.

This is very similar to the section above about unit test ordering. Given this, I have no doubt that ordering steps in an integration testing scenario is not a bad practice but good judgement.
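The points above can be sketched with an in-memory stand-in for the shop. This is a toy under stated assumptions, not a real end-to-end test: the CheckoutScenario class, its Session state and the step names are hypothetical, and no browser, server or test framework is involved. What it tries to show is that ordered steps share one session, so each step starts from the state left by the previous one, and that everything after the first failure is skipped rather than run.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-memory sketch; no real shop or test framework is involved.
class CheckoutScenario {

    // State shared by all steps: what step X leaves behind is what step X+1 starts from.
    static class Session {
        final List<String> cart = new ArrayList<>();
        String deliveryAddress;
        String paymentType;
        boolean paymentDetailsEntered;
        boolean orderConfirmed;
    }

    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    // Steps in scenario order (LinkedHashMap keeps insertion order).
    // breakPaymentType simulates a defect in the "choose a payment type" step.
    static Map<String, Consumer<Session>> steps(boolean breakPaymentType) {
        Map<String, Consumer<Session>> steps = new LinkedHashMap<>();
        steps.put("put_one_product_in_cart", s -> s.cart.add("some-product"));
        steps.put("fill_in_delivery_address", s -> {
            check(!s.cart.isEmpty(), "cart should not be empty");
            s.deliveryAddress = "1 Example Street";
        });
        steps.put("choose_payment_type", s -> {
            check(s.deliveryAddress != null, "address should be set");
            if (breakPaymentType) {
                throw new IllegalStateException("simulated failure");
            }
            s.paymentType = "card";
        });
        steps.put("enter_payment_details", s -> {
            check(s.paymentType != null, "payment type should be chosen");
            s.paymentDetailsEntered = true;
        });
        steps.put("get_order_confirmation", s -> {
            check(s.paymentDetailsEntered, "payment details should be entered");
            s.orderConfirmed = true;
        });
        return steps;
    }

    // Runs the steps in order against a single session; after the first
    // failure, the remaining steps are reported as skipped and never executed.
    static List<String> run(Map<String, Consumer<Session>> steps) {
        Session session = new Session();
        List<String> report = new ArrayList<>();
        boolean failed = false;
        for (Map.Entry<String, Consumer<Session>> step : steps.entrySet()) {
            if (failed) {
                report.add("SKIPPED " + step.getKey());
                continue;
            }
            try {
                step.getValue().accept(session);
                report.add("PASSED " + step.getKey());
            } catch (AssertionError | RuntimeException e) {
                failed = true;
                report.add("FAILED " + step.getKey());
            }
        }
        return report;
    }

    public static void main(String[] args) {
        run(steps(true)).forEach(System.out::println);
    }
}
```

Note that no step contains setup code for the previous ones. That is exactly the duplication an unordered suite would need, and the reason the ordered version is cheaper to maintain.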

Conclusion

As in many cases in software development, a rule has to be contextualized. While in general, it makes no sense to have ordering between tests, there are more than a few cases where it does.

Software development is hard because the "real" stuff is not learned by sitting on university benches but through repeated practice and experimentation under the tutelage of more senior developers. If enough more-senior-than-you devs hold the same opinion on a subject, chances are you’ll take it for granted as well. At some point, one should single out such an opinion and challenge it, to check whether it holds in one’s own context.